- Click on the triangle to the left of a heading to unfold / fold.

- Links that look :like this will expand to give more detail.

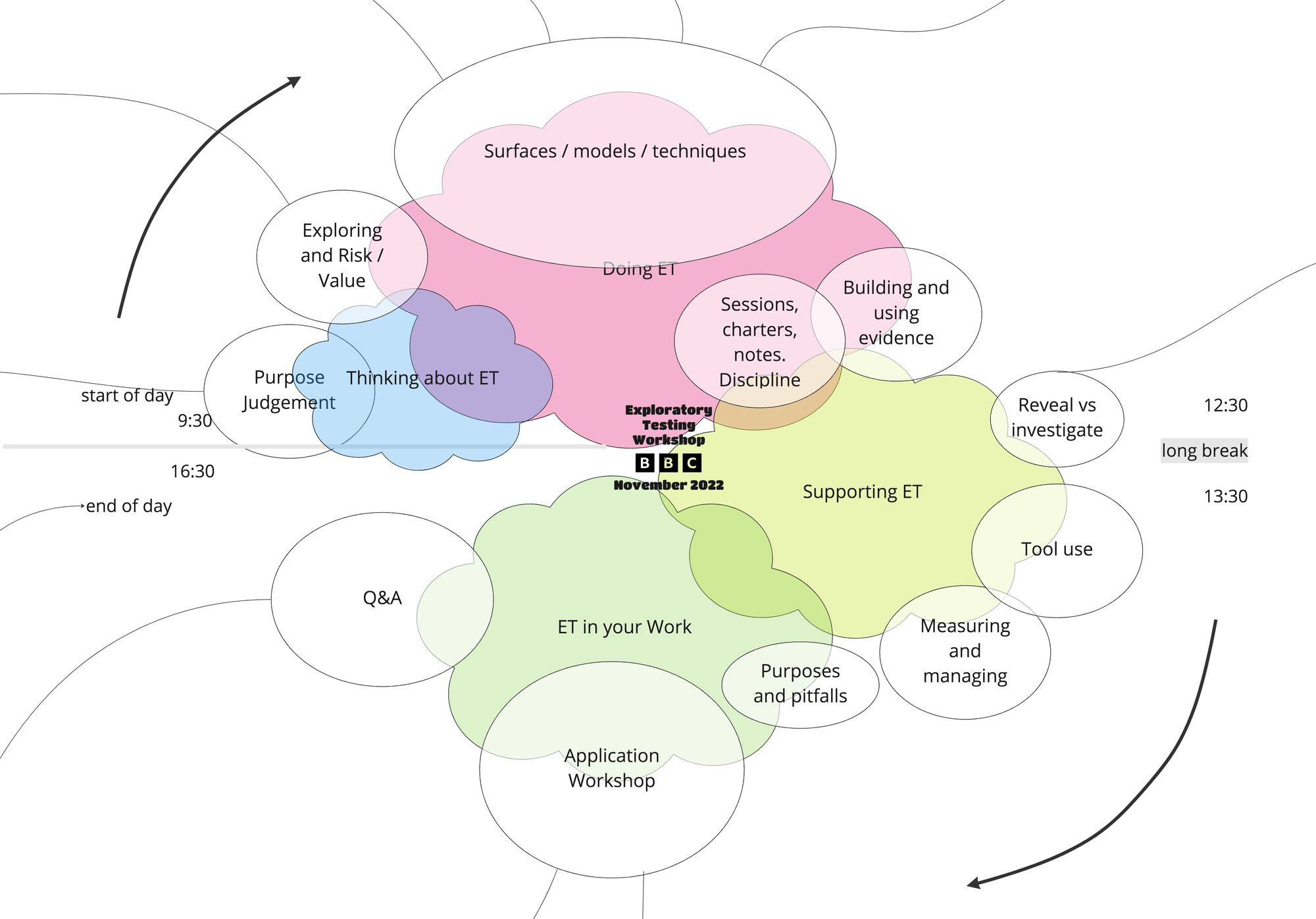

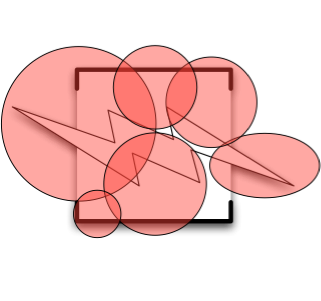

Here's the diagram of :how you voted. Do add your aims to the pile on Miro.

Here's something to play with as people turn up: Puzzle 36.

Logistics

Workshop timing

On Monday, we’ll run 9:30 to 16:30. I’ll open the Zoom room at 9am if you want to talk beforehand, and I’ll keep it open at the end for a while. We’ll break around 12:30 for an hour, and a couple of times in the morning and afternoon.

Before the workshop

- Friday 4th – email to say Hello!

- Tuesday 8th – email to ask for help with content balance

- Wednesday 9th – email to ask for your own goals for the workshop, Thursday drop-in note, content balance vote closes 5pm

- Thursday 10th – 12 noon drop-in to let you check your tech and to resolve any questions face-to-face

- Friday 11th – workshop sequence and some materials available here

- Monday 14th – workshop open!

Zoom

Zoom link: Exploratory Testing Workshop – BBC

Meeting ID: 878 4425 7374

Passcode shared by email

Miro board – no longer linked

You have access via your BBC email sign-in.

But if you need the public link it is: https://miro.com/app/board/uXjVPHO_BmM=/?share_link_id=273784806086

Password shared by email

Preparing for the workshop

Equipment

This is a hands-on, online workshop. You'll need a laptop with a modern browser and an internet connection. Ideally, you'll need enough screen space to be able to see / hear Zoom, and to interact with the Miro board, and with the thing you're testing.

I'll have my camera on; please do whatever makes you comfortable, and allows you to communicate most easily with your group, or with me (if you're talking with me).

If you don't have access to a laptop, you'll be able to get some value using a tablet. You'll even be able to get some hands-on experience using a smartphone...

Working

We'll be in and out of breakout rooms for group work. We'll use Miro as a record of what we've done. Do work alone if you prefer – the exercises should have alternatives for group and solo participation. Please do join whole-group conversations.

We'll learn best from experience, and from each other, so there will be plenty of chances to share your work with the room, and to discover how others approach the problems.

Tools and technology

You do not need to read or write code, nor to install tools. You are welcome to use your own tools. For instance, you might use Excel to generate data, browser tools to check out decision flow in the system under test, or DataGraph to analyse its responses.

If we turn to work with test design involving thousands of tests, I'll give you tiny in-browser tools to generate data, to run tests, and to gather output. I've chosen to supply most tools, and to run them in the browser, because I want to make the test system easy to access in the workshop, and to make it as accessible as possible to a wide variety of testers. I've not made that choice because it reflects any particular technology.

Contact

I'm James Lyndsay. My email is jdl@workroom-productions.com. My phone is +447904158752.

Workshop structure

Exploratory Testing – Key Ideas

We'll start off by looking at exploration and how it relates to play, testing and judgement. We'll move to the idea that exploring the real behaviour of a system exposes truths that could be risks, the persistence of test artefacts, and ways that we might explore a system.

We'll return here to look at different ways to explore a system, and managing the work with session-based testing.

Purpose, Judgement and Discipline

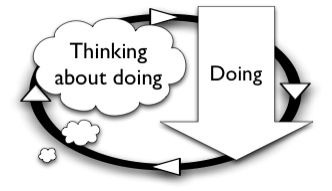

Exploration can be thought of as play guided by :intent

Exploratory Testing can be thought of as exploration guided by :judgement

:Discipline in test design helps us discover information more swiftly or more truthfully.

:Discipline in test recording enables analysis, learning and trustable evidence.

Characteristics of Exploration:

- Reliable discovery of information

- Methods have a start point, a process

- Methods may change based on circumstances

Exploring Without Requirements

We can't test without using our judgement; testing involves comparisons.

We can test without requirements – but often we're asked to restrict our judgement to situations where we're looking for inconsistency between requirements and behaviour. Testers can be uncomfortable with exploring to find out what a system does, because they feel they should start from a point of exploring what it is.

I try to help people work, with discipline, through a system's behaviours. We'll explore something to find out what it does, and from there what it is. We're not testing; we're making a model. We're not particularly judging the system, but we are judging the model we're building to gauge how well it describes the system.

Here's :more on exploring without requirements

Exercise: Exploring Without Requirements

10 minutes exploring, 5 minutes debrief.

The purpose of playing with the puzzle is to build a model of what it does.

The purpose of the exercise is to observe how you act, and how you feel, when working without requirements.

- Explore Puzzle 26 in pairs

- Observe how you're working, and how your partner is working. Do talk about what you've seen.

- Observe the models you build, and how you arrive at / accept / test / refute them

Debrief – let's talk. We'll use Miro if it's worthwhile.

Value, Risk and Feedback

Let's think about the time between test design and the moment when we can assess system behaviour.

Some tests, especially those which are run over and over again, can still be useful years after they were first run. They typically track expectations of value, pick up when that expectation is not fulfilled, and hopefully run swiftly and smoothly – they persist, as a system's value will persist. They're often for a confirmatory, machine-led purpose.

Some tests are informed by the current behaviour of the system. They may be ephemeral; run once. They're typically built to look at the current real behaviours of a system, often around a newly-recognised behaviour. They're usually for a human-led purpose. They may be refined to a point where they swiftly verify value (and alert on its absence) and move into the confirmatory tests, where they're useful into the future.

Some testers explore using scripted automation to generate massive numbers of tests. The tools and code enabling that automation persist (as valuable parts of system infrastructure). The specific experiments can be re-run if necessary. The data and observations are generally discarded once they've been used as evidence.
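As a minimal sketch of that kind of scripted exploration, the Python below generates many inputs, runs each through a system, and keeps only a tally of what was observed. `system_under_test` and `classify` are hypothetical stand-ins invented for illustration; a real script would call the real system. The fixed seed is an assumption about how you might make a specific experiment re-runnable.

```python
import random
from collections import Counter


def system_under_test(value: float) -> str:
    """Hypothetical stand-in, invented for this sketch; swap in a call to your own system."""
    if value < 0:
        return "error: negative input"
    return f"{value * 2.54:.2f}"


def classify(output: str) -> str:
    """Reduce each output to a coarse category, so thousands of results can be summarised."""
    return "error" if output.startswith("error") else "number"


def explore(runs: int, seed: int) -> Counter:
    """Generate `runs` random inputs and tally the categories of output seen."""
    rng = random.Random(seed)  # a fixed seed lets the same experiment be re-run
    tally = Counter()
    for _ in range(runs):
        value = rng.uniform(-100.0, 100.0)
        tally[classify(system_under_test(value))] += 1
    return tally


if __name__ == "__main__":
    print(explore(runs=10_000, seed=36))
```

The script and the seed persist; the individual inputs and outputs are thrown away once the tally has been read.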

Ways To Explore a System

A swift thinking exercise.

Exercise – How do you Explore What a System Does?

5 mins to list, 10 mins or less to debrief

- Make your own list away from the board, on your own

- Add those items to the board with partners

Debrief

- In groups or individually, if you see stuff which goes together, draw and label a circle for that grouping, and put them in

- If something you want for a circle has moved to another, duplicate it and put it where you want it. Draw a line between if you like.

- Let the groupings and their contents talk to you. If something's missing from a grouping, add it.

When activity subsides, we'll gather as a whole and talk.

I've written a :short and general list of ways.

Session-based Testing

Exploration is:

- Open-ended

- Centred on learning

One way to manage the work is with Session-based Testing. SBT tries to:

1) Limit scope and time by

- prioritising via a list of sessions,

- focussing with a charter

- timeboxing

2) Enable (group and individual) learning with

- regular feedback

- good records

Sessions and Charters

A session is a unit of time. It is typically 10-120 minutes long; long enough to be useful, short enough to be done in one piece. A session is done once.

A charter is a unit of work. It has a purpose. A charter may be done over and over again, by different people. When planned, it's often given a duration – the duration indicates how much time the team is prepared to spend, not how long it should take.

The team may plan to run the same charter several times during a testing period, with different people, or as the target changes.

Anyone can add a new charter – and new charters are often added. When a tester needs to continue an investigation, but wants to respect the priorities decided earlier, they will add a charter to the big pile of charters, then add that charter somewhere in the list of prioritised charters – which may bump another charter out.

In Explore It, Elisabeth Hendrickson suggests

A simple three part template

Target: Where are you exploring? It could be a feature, a requirement, or a module.

Resources: What resources will you bring with you? Resources can be anything: a tool, a data set, a technique, a configuration, or perhaps an interdependent feature.

Information: What kind of information are you hoping to find? Are you characterizing the security, performance, reliability, capability, usability, or some other aspect of the system? Are you looking for consistency of design or violations of a standard?

I've found it helpful to consider a charter with a starting point, a way to generate or iterate, and a limit (which may work with the generator), and to explicitly set out my framework of judgement.
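If you keep charters in a tool rather than on cards, the three-part template maps onto a small record. This is only a sketch: the `Charter` class, the `planned_minutes` field with its default, and the example values are assumptions made for illustration, and the sentence form is just one way to read the three parts together.

```python
from dataclasses import dataclass


@dataclass
class Charter:
    target: str        # where you are exploring
    resources: str     # what you bring with you
    information: str   # what you hope to find
    planned_minutes: int = 60  # time the team is prepared to spend (hypothetical default)

    def as_sentence(self) -> str:
        return f"Explore {self.target} with {self.resources} to discover {self.information}"


charter = Charter(
    target="the text input field",
    resources="a list of boundary values",
    information="where the output changes",
    planned_minutes=20,
)
print(charter.as_sentence())
```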

Charter Starters

Use these to give shape to early exploratory test efforts.

These are unlikely to be useful charters on their own – but they may help to provoke ideas, clarify priority, and broaden or refine context.

- Note behaviours, events and dependencies from switch-on to fully-available

- Map possible actions from whatever reasonably-steady state the system stabilises at after switch-on. Are you mapping user actions, actions of the system, or actions of a supporting system?

- How many different ways can the system, or a component within that system, stop working (i.e. move from a steady, sustainable state to one unresponsive to all but switch-on)? Try each when the system is working hard - use logs and other tools to observe behaviours.

- Pre-design a complex real-world scenario, then try to get through it. Keep track of the lines of enquiry / blocked routes / potential problems, and chase them down.

- What data items can be added (i.e. consumable data)? Which details of those items are mandatory, and which are optional? Can any be changed afterwards? Is it possible to delete the item? Does adding an item allow other actions? Does adding an item allow different items to be added? What relationships can be set up between different items, and what exist by default? Can items be linked to others of the same type? Can items be linked to others of different types? Are relationships one-to-one, many-to-one, one-to-many, many-to-many? What restrictions and constraints can be found?

- Try none-, one-, two-, many- with a given entity relationship

- Explore existing histories of existing data entities (that keep historical information). Look for bad/dirty data, ways that history could be distorted, and the different ways that history can be used (basic retrieval against time, summary, time-slice, lifecycle).

- Identify data which is changed automatically, or actions which change based on a change in time, and explore activity around those changes.

- Respond to error X by pressing ahead with action.

- Identify potential nouns and verbs - i.e. what actions can you take, and what can you act upon? Are there other entities that can take action? What would their nouns and verbs be? Are there tests here? Are there tools to allow them?

- Identify scope and some answers to the following: In what ways can input or stimulus be introduced to the system under test? What can be input at each of those points? What inputs are accepted or rejected? Can the conditions of acceptance or rejection change? Are some points of inputs unavailable because they're closed? Are some points of input unavailable because the test team cannot reach them? Which points of input are open to the user, and which to non-users? Are some users restricted in their access?

- Identify scope and some answers to the following: In what ways can the system produce output or stimulate another system? What kinds of information is made available, and to what sort of audience? Is an output a push, a pull, a dialogue? Can points of output also accept input?

- Explore configuration, or administration interfaces. Identify environmental and configuration data, and potential error/rejection conditions.

- Consider multiple-use scenarios. With two or more simultaneous users, try to identify potential multiple-use problems. Which of these can be triggered with existing kit? If triggered, what problems could be seen with existing kit, and what might need extra kit? Try to trigger the problems that are reachable with existing kit.

- Explore contents of help text (and similar) to identify unexpected functionality

- Assess for usability from various points of view - expert, novice, low-tech kit, high-tech kit, various special needs

- Take activity outside normal use to observe potential for unexpected failure; fast/slow action, repetition, fullness, emptiness, corruption/abuse, unexpected/inadequate environment.

- Identify ways in which user-configurable technology could affect communication with the user. Identify ways in which user-configurable technology could affect communication with the system.

- Pass code, data or output through an automated process to gain a new perspective (e.g. HTML through a validation tool, a website through a link mapper, strip text from files, dump data to Excel, job control language through a search tool)

- Go through Edgren’s “Little Black Book on Test Design”, Whittaker's "How to Break..." series, Bach's heuristics, Hendrickson's cheat sheet, Beizer-Vinter's bug taxonomy, your old bug reports, novel tests from past projects to trigger new ideas.

Writing charters takes practice. A single charter often gives a scope, a purpose and a method (though you may see limits, goals, pathologies and design outlines). You could approach it by considering...

- Existing bugs – diagnosis

- Known attacks / suspected exploits / typical pathologies

- Demonstrations – just off the edges

- Questions from training and user assessment

A collection of charters works together, but should always be regarded as incomplete. A collection for a given purpose (to guide testing for the next week, say) is selected for that purpose and is designed to be incomplete.

Writing Charters

20 minutes, groups or individually

- Pick a subject – either your own system, or one new to you. I suggest https://flowchart.fun/ if you want something to share.

- Write at least three charters – one to explore in a new way, one to investigate a known bug, one to search for surprises

- We'll talk about those charters

Further work – prioritise and run the sessions.

Timeboxing

When entering a session, the tester chooses their approach to match the time; a 10-minute session would have different activities from a 60-minute session.

Regular review

Notes taken during the session are for the tester. They are reviewed soon after the end of the session, typically by a peer.

The team may choose to bolster that learning by keeping the session notes.

Metrics

I keep track of time – time spent and time planned.

I keep track of how the tester feels about the part of the system they tested. A proxy for that is how much time they think they might need to 'complete' testing.

I keep track of charters added, and the planned sessions that we weren't able to do.

I keep track of (for instance) functional area, technological target, and risk profile of a charter, to help me slice the list, and to help me describe the work.
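Those metrics are simple enough to keep in a spreadsheet. As a sketch of the same idea in Python, assuming one record per session and slicing by a single label: the field names, the `area` label and the example sessions are all invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SessionRecord:
    charter: str
    area: str             # e.g. functional area, used to slice the list
    planned_minutes: int
    spent_minutes: int
    wanted_minutes: int   # tester's estimate of extra time needed to 'complete' testing


def summarise_by_area(records):
    """Total planned, spent and wanted time for each area."""
    totals = defaultdict(lambda: {"planned": 0, "spent": 0, "wanted": 0})
    for record in records:
        area = totals[record.area]
        area["planned"] += record.planned_minutes
        area["spent"] += record.spent_minutes
        area["wanted"] += record.wanted_minutes
    return dict(totals)


records = [
    SessionRecord("Map inputs", "input", planned_minutes=30, spent_minutes=35, wanted_minutes=60),
    SessionRecord("Probe boundaries", "input", planned_minutes=20, spent_minutes=20, wanted_minutes=0),
    SessionRecord("Read release notes", "artefacts", planned_minutes=15, spent_minutes=10, wanted_minutes=30),
]
summary = summarise_by_area(records)
```

A large `wanted` total against a small `spent` total is a prompt for a conversation about that area, not a measure of quality.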

Exploratory Testing – Approaches

In this section, we'll try different models to build, and different things to help you to explore.

A framework helps you explore a deliverable for information. Frameworks work well with software. Each of these frameworks can be communicated and checked. Each builds a model of the software, and so enables further, more directed exploration.

Different testers exploring the same thing with the same framework might make different decisions and take different steps. However, they are likely to build similar models, and to be able to understand each other's models.

Frameworks help you build a model – different ways of working with the input, ways of gathering and processing output can lead you more-easily to the information you need to build those models. Exploring different artefacts of a system leads you to deeper understanding and sometimes to different models. We'll try examples of these approaches.

We'll also spend time introducing session-based testing, and looking at notes. Written support for those parts can be found below.

Exercises

We'll use Converter_v3 as a test subject for many / all of these exercises. Please ignore the sidebar until we get beyond the first few exercises.

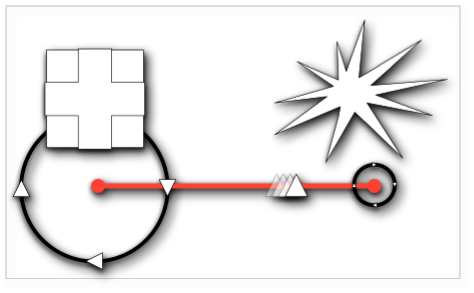

Framework: In / Out

The In / Out framework is a discipline to help you explore and identify inputs and outputs.

It’s up to you what you might call an input, or an output. Some people use objects, some people use verbs, some people use verb-object, or verb-object adjective. Some people draw pictures, or highlight a screenshot. Whatever you use, try to group and generalise. As you identify more and more, try to extend the groups you have identified, and include more items if you can. Think about things you might not have included in your groups. Consider new groups, or alternative ways of classifying. Look at the ways you differentiate between members of each group. You’ll grow long lists.

When you’ve got lists, try to identify those items which are linked. Think about how the links work, how you can label, generalise, extend and differentiate them.

This framework helps you focus on data and transformation. If you find yourself considering the function or use of the thing you are exploring, that’s fine, but be aware that you can choose to return to the disciplined framework.

Exercise: Input – Output – Transformation

5 minutes, then debrief

- Using just the text field, consider what your 'inputs' might be.

- Using just the output field, consider what your 'outputs' might be

- When you've made some experiments / observations, take stock. Try to group your 'input's in several ways. Try to group the 'output'. What can you see that is a useful grouping? Can you see any patterns? What experiment or condition might be missing within a grouping?

- We'll talk about what you've seen

- We'll suggest and check connections between inputs and outputs. What is transformed?

Debrief – publish your notes on Miro. Check out other people's work. Can some understanding be communicated in notes?

Exercise: What did you use?

2 minutes, then debrief

Have a look at the page's records of your activity.

- How does looking at your actions make you feel?

- How do your actions change over time?

- Can you pinpoint a reason?

Debrief – add notes to board as you think. Share verbally, voluntarily.

Framework: Behaviours

The Behaviour framework is a discipline to help you explore and identify the different ways that your subject works. You’ll identify behaviours and places where / when those behaviours change.

When we think in terms of time, we recognise that behaviours tend to last until an event. Events punctuate behaviours. What persists? What changes? What marks the change?

When we think in terms of a range, a behaviour is shared by all members of a group within that range. Perhaps we'd think of boundaries in that space, rather than an event in time.

It’s up to you what you might call an event, a boundary, or a behaviour. You’ll probably need to write a few things down before you start to group them. Use your exploration to help you identify and differentiate the items in your lists more clearly.

Once you have a collection of behaviours and events/boundaries, try to identify and isolate the relationships between different behaviours, and the ways that the events/boundaries can mark the transition. Can some groups be isolated? Do some seem more important than others? Allow your exploration to guide you to answers to these questions.

Think about what triggers the change in behaviour (you? another system? chance? a combination of factors? an undo? a bug?) and what happens when that trigger is followed swiftly by another.

Exercise: Behaviours and boundaries

5 minutes, then debrief

- Consider your input as a range

- What boundaries can you find where the output changes in some way?

- How do you distinguish between different ways that it changes?

- Explore behaviours around just under and just over

- Are those behaviours shared for different units?

- We'll talk about what you've seen

Debrief – publish your notes on Miro. Check out other people's work. Can some understanding be communicated in notes?
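Once you have two inputs that behave differently, a tool can narrow down where the change happens. The sketch below uses bisection, which is my choice for illustration and not part of the exercise. `hypothetical_behaviour` is invented and does not describe the workshop's converter, and the function assumes a single change of behaviour between the two ends.

```python
def find_boundary(behaviour, low, high, tolerance=1e-6):
    """Narrow the gap between two inputs that behave differently.

    Assumes there is exactly one change of behaviour between low and high;
    with several, this finds one of them and says nothing about the rest.
    """
    low_behaviour = behaviour(low)
    if behaviour(high) == low_behaviour:
        raise ValueError("both ends behave alike; choose different ends")
    while high - low > tolerance:
        middle = (low + high) / 2
        if behaviour(middle) == low_behaviour:
            low = middle
        else:
            high = middle
    return low, high


def hypothetical_behaviour(value):
    """Invented for this sketch: the kind of output seen, not the output itself."""
    return "plain" if value < 1000 else "scientific notation"


just_under, just_over = find_boundary(hypothetical_behaviour, 0.0, 5000.0)
```

The two values returned are candidates for "just under" and "just over"; deciding what counts as a different behaviour is still a matter of judgement.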

Different means of input

If you're testing the UI, use the UI. Maybe write a test system to lie the other side of the UI so that you can manipulate the UI from both sides.

Slider I

If you're looking to test through the UI, and investigate the deeper system, then let the UI help, but don't get stuck on it. In the next exercise, you'll switch to changing the input swiftly and perhaps carelessly.

Exercise: Input with a Slider

3 minutes, mostly debrief

Choose the radio button labelled "slider"

Work with the slider to reveal more about the system under test. Do you need more than a minute?

Debrief – What's different about your interactions with the system under test, when you're using the slider?

Slider II

In this exercise, you'll use a slider which lets you set the ends. You typically wouldn't find this on a UI, but it will push you to think about test design.

Exercise: Input with a Parametric slider

3 minutes, mostly debrief

Choose the radio button labelled "parametric slider"

Choose the start and ends of the slider. Play with the slider. What have you found?

Debrief: Share your slider ends (and perhaps why you chose them). Share what you learned about the system under test.
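Outside the browser, a parametric slider is close to a sweep: choose two ends, move between them in equal steps, and note where what you observe changes. This is a sketch under that assumption, with an invented `observe` function standing in for whatever you would watch on the real system.

```python
def sweep(start, end, steps, observe):
    """Move from start to end in equal steps, recording each point where the observation changes."""
    changes = []
    previous = None
    for i in range(steps + 1):
        value = start + (end - start) * i / steps
        seen = observe(value)
        if i > 0 and seen != previous:
            changes.append((value, previous, seen))
        previous = seen
    return changes


def hypothetical_observe(value):
    """Invented for this sketch; replace with an observation of your own system."""
    return "low" if value < 5 else "high"


changes = sweep(0, 10, 10, hypothetical_observe)
```

The choice of ends and step count is the test design: a coarse sweep can step straight over a narrow band of different behaviour.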

Pre-filled bulk input

In this exercise, you'll work with a list of inputs.

Exercise: pre-filled input

Use the pre-filled list to see how several values are converted. Feel free to change those values.

Debrief questions:

- Do you see any surprises – reasons to change the model of the underlying system which you built in the previous exercise?

- Do you judge any of the outputs to be wrong? What are you basing that judgement on?

- How did you change this collection of tests? Why?
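One way to make the basis of your judgement explicit is to write your model down as code and run the same list through both. The sketch below does that; `hypothetical_system` and `my_model` are invented for illustration and are not the workshop's converter.

```python
def compare_with_model(inputs, system, model):
    """Run every input through the system and through your own model; list the disagreements."""
    surprises = []
    for value in inputs:
        actual = system(value)
        expected = model(value)
        if actual != expected:
            surprises.append((value, expected, actual))
    return surprises


def hypothetical_system(inches):
    """Invented for this sketch: converts to centimetres, but refuses large values."""
    if inches > 1000:
        return "too large"
    return f"{inches * 2.54:.2f}"


def my_model(inches):
    """The tester's current belief about what the system does."""
    return f"{inches * 2.54:.2f}"


surprises = compare_with_model([0, 1, 10, 5000], hypothetical_system, my_model)
```

A disagreement says only that the system and the model differ. Which of the two needs to change is the judgement the debrief questions ask about.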

Exploring the system's artefacts

Your exploration will be better-informed if you use information about the system to help you interact with the system. This system has tests, story, examples, release notes, configuration data and reliable external sources.

Exercise: Information about the System

7 mins, then debrief

Check out the story, the examples, the tests.

Do use the system as you work through these artefacts

Debrief question: what have you found out about the underlying system?

Exercise: Information about Change

7 mins, then debrief

Read the release notes.

Debrief Question 1: Does this change your understanding of the system? What now catches your interest?

Debrief Question 2: With a real system, what other artefacts might you seek out?

Exercise: Configurations

7 mins, then debrief

Consider the configuration information

Debrief question: Does this change or add to your understanding of partitions? Of system behaviour?

More Frameworks

We won't talk about them today, but you might use:

Framework: Making Sense

This framework is best when exploring something that solves a clear problem for a known audience. It goes well with UX work.

Software is an artefact, a made thing.

Most software exists because somebody thinks they need it.

We try to meet that need by making systems around software.

We know that demand comes from those who pay for, use and value the resulting system – but those people don’t value our software. They value what it does, the collection of behaviours of the system. This collection is an emergent property of the made thing, not a made thing of itself.

We also note that a system is also shaped by the needs of its makers and their technologies, by the tactics of its exploiters, by the shape and form of its data, by the environment in which the software exists to provide the basis of a valuable system.

When we explore the actual behaviours of a system, we see all these – and more – together. We may find problems where the different drivers and shapers work against each other.

In this framework, we manipulate a system and observe its behaviours. We consider whether those observed behaviours make sense in the context in which the system is valuable. We consider whether those behaviours might instead reflect the other demands on a system.

We try to make sense of what the deliverable does.

Framework: Making a Map

This framework is handy for working through software which needs to be navigated, or where the user moves through states, or where there is some sort of a conversation. It's also helpful when testing from the point of view of a data entity, or an external system.

Exploring and learning is making a map.

A map can tell you where you’ve been, expose where you have to go, and give you an artefact that can be shared.

In this framework:

- we’ll decide on a fixed base point

- we’ll decide on a step – a single repeatable action that can be taken in different ways.

- we’ll decide what those different ways might be. If they were directions, they might be forwards, backwards, left, right.

- then, starting from the base point, we’ll work through all the directions, taking one step in that direction, seeing what’s there, and returning to the base point before taking the next.

That’s the method. But you can write down a map in many different ways. The choice is yours...

You might want to consider: How are you labelling? Does direction have a meaning? Does distance? What relationships are expressed in space? What do you assume?
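The base point, step and directions described above can be sketched in a few lines. Everything named here is hypothetical: the pages, the links between them, and the idea that a step means following a link.

```python
def one_step_map(base, directions, step):
    """From the base point, take a single step in each direction and note what is there.

    Every step starts from the base, which stands in for 'returning to the base point'.
    """
    return {direction: step(base, direction) for direction in directions}


# Hypothetical example: the 'system' is a tiny set of pages, and a step is following a link.
hypothetical_links = {
    ("home", "forwards"): "search",
    ("home", "left"): "settings",
    ("home", "right"): "help",
}


def follow(page, direction):
    return hypothetical_links.get((page, direction), "nothing found")


first_map = one_step_map("home", ["forwards", "backwards", "left", "right"], follow)
```

A dictionary is only one way to write the map down. Repeating the method from each place found would extend it, and the questions about labelling, direction and distance apply just as much to this form.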

Diagnosis vs discovery

Diagnosis involves exploring a known behaviour. You'll typically be adjusting known factors to reproduce a problem, then reducing those triggers to see what is relevant or irrelevant, and to see how the noticed behaviour changes.

Discovery involves searching for surprises. You'll typically be trying lots of different factors to see what happens. You may have a model in mind; error guessing, known attacks, prior problems can inform your work. You'll often have your observations turned on – you'll try to be aware of transaction and monitors, logs, data contents, system history, the people involved.
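For diagnosis, reducing triggers can be sketched as a loop: take away one factor at a time, and keep it out if the problem still appears. This is a simple greedy reduction chosen for illustration; it can miss the smallest set when factors interact. The factors and `hypothetical_problem` are invented.

```python
def reduce_triggers(factors, reproduces):
    """Remove one factor at a time, keeping the removal whenever the problem still appears."""
    remaining = set(factors)
    if not reproduces(remaining):
        raise ValueError("the starting factors do not reproduce the problem")
    for factor in sorted(factors):
        trial = remaining - {factor}
        if reproduces(trial):
            remaining = trial  # the problem survives without this factor
    return remaining


def hypothetical_problem(factors):
    """Invented for this sketch: the problem needs both of these factors to appear."""
    return {"large file", "slow network"} <= factors


relevant = reduce_triggers(
    {"large file", "slow network", "admin user", "dark mode"},
    hypothetical_problem,
)
```

In practice `reproduces` is the expensive part, because it means setting up the system and trying again. The loop only keeps the bookkeeping honest.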

Diagnosis

How many testers does it take to change a lightbulb? None. The tester tells you that the room is dark.

A good tester will need to do more than simply draw attention to a problem.

A doctor needs to understand anatomy, physiology, and :pathology to diagnose an illness from symptoms.

A tester needs to understand architecture, system integration and potential bugs to diagnose a problem from how it might be presented.

A problem can be far worse than its symptoms. Similarly awful symptoms can arise from otherwise innocuous problems.

Diagnosticians need to consider proximate and ultimate cause, chains of cause and effect, necessary and sufficient causes.

However, they must also acknowledge that cause and effect are not necessarily discrete, linked in an obvious way, or even identifiable individually. In systems where there is feedback between cause and effect, diagnosis is easily-biased towards the interests of the diagnostician. A given diagnosis may be useful, but may not be right.

:x Diagnosis and Pathology

Doctors include psychology on this list; the influence of the mind over the body. I’m not sure of the analogue for testing.

Discovery

Noticing things is odd. You may see something several times, before you notice it. You may need to gather plenty of information and push it around, for a while, before an underlying explaining pattern reveals itself to you. You may need a sleep, or a walk.

As exploratory testers, we have the pleasure of making regular novel discoveries. This exercise / toy lets us experience that accessibly.

Raster Reveal

Exercise: Raster Reveal

1-minute exploration, short debrief, repeat

Explore the image: move over parts where you want to see more.

Then we'll talk about it. Some questions:

- When did you know what you were looking at?

- How did you know?

- Did you think it was one thing before revealing another?

- How could you describe your actions? Would your actions transfer to another person? Another picture?

- What role do your knowledge, skills and judgement play in exploring this?

For more on this, see :Dual Process Theory, and :Kahneman's Thinking Fast and Slow.

Notes and Reports

Some test teams standardise on one note-taking approach; a massive mindmap, a shared OneNote, rich-text on a session-based Jira ticket, a set of sequential blank books.

Most of the time, exploratory testing notes are kept privately for the tester who tested. Plenty of testers rely on their memory.

Conversation: Making Notes

- Why might we record?

- What do you record?

- How do you keep records?

- What do you share? Why?

We keep notes to help our minds stay available in the present, and to support other minds – our colleagues, ourselves in the future.

- Remember – Mnemonic help

- Review – Sharing, improvement

- Return – Historical analysis, long-term project memory

It’s key to record decisions. But you might also record...

Identifiers:

- who

- when

- what

Qualities:

- risk

- estimated time

- dependencies

As you go:

- actions

- events

- data

- expectations

- bugs

- plans

- interruptions

- actual time taken

- time wanted

- problems

Sharing: Some Notes

We'll pick a long exercise, and run it specifically for notes.

Then we'll compare.

Supporting Exploratory Testing

Styles of Managing ET: Common approaches

These seem to arise naturally

- Stealth Job – Unacknowledged, unbudgeted, heroic, deniable

- Bug Hunt – Enthusiastic crowd, little learning

- Gambling – Kick off by risking an unknown outcome

- Set Aside Time – Next steps after making a budget

- Off-Piste (Iron Script vs Marshmallow Script) – You’ve worked so hard to make them

- Traditional Retread – Managing discovery work with construction metrics

- Script-Substitute – Run out of time? Surely ET is the answer!

What specific tasks suit ET’s strengths?

Checking a risk model – Improving unit testing – Diagnosis of beta-test bugs – Emergent behaviours in integration – Getting out of a rut – Discovering what needs to be tested – Looking for security exploits – Experiments in performance testing ...

Overall strategy:

- Core practice (tool-supported)

- Bug hunting (reactive, diagnostic, path-finding)

- Exploration time (regular gamble)

- Blitz (focussed, brief)

- Sole practice (only exploratory, no pipelines, unit tests etc...)

Where in the Project?

- Pre-delivery – preparation, toolkit, scope

- Ongoing delivery – testing, learning

- Approach to live – emergent behaviours

- Beta + live – diagnostics

Common Pitfalls

- System not ready to be tested

- Faulty (ET copes but may not reveal systematic failure) – ET just because there’s no documentation / no time – Functionality masked by existing problems

Often forgotten:

- Learning process – Tool use – Discipline

- Hard to make ET work when: Spread too thin – Not directed / controlled – Bugs are the only record

- Easily-broken Expectations: Every possible permutation – Only bugs and pass/fail need to be logged – Continuing with an unproductive approach

- Mind the edges: Too many bugs! – Reviews take time – Fun

Supporting Testing with Exploration

We help grow good systems by giving swift, relevant and true feedback.

- Swift – to allow control

- Relevant – to allow us to use control to steer

- True – to steer accurately towards something real

Conversation: How can your Exploratory Testing work supply this?

10 minutes, Miro + voting

Conversation: What does 'Good' exploratory testing look like?

10 minutes, Miro + voting

Tooling

Exploratory testing without tools is weak and slow.

If you're testing a UI, use a UI testing tool. If the thing you're testing has a UI, but you're testing through the UI rather than testing the UI itself, find another way.

Automate environments, APIs, database access, file access, permissions, data creation / comparison, set up, day-to-day admin in preference to brittle UI automation.
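As one small illustration of data comparison, here is a minimal sketch that compares two records (for example, the same database row read before and after an action) and reports only the fields that differ. The function name `compare_records` and the `MISSING` marker are hypothetical, and the records are assumed to be plain dictionaries.

```python
# Marker used when a field exists on one side only (an illustrative choice).
MISSING = "<missing>"


def compare_records(expected, actual):
    """Return {field: (expected_value, actual_value)} for each differing field."""
    diffs = {}
    for key in sorted(set(expected) | set(actual)):
        e = expected.get(key, MISSING)
        a = actual.get(key, MISSING)
        if e != a:
            diffs[key] = (e, a)
    return diffs


if __name__ == "__main__":
    before = {"id": 7, "status": "open", "owner": "sam"}
    after = {"id": 7, "status": "closed", "updated": "2024-01-01"}
    for name, (was, now) in compare_records(before, after).items():
        print(f"{name}: {was!r} -> {now!r}")
```

A helper like this keeps attention on what changed, including changes you did not expect, without going near the UI.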

Exercise: Tools Workshop

10 mins until done

Someone adds a tool to the Miro board, then describes why they use it

While they're talking, someone else adds another

The conversation moves on to the next person

...and so on

Observation tools

- database contents, environments, match/compare

- object browser, text stripper, mapper

- screen capture, keylogger, transaction logs, system logs

Action / setup tools

- test harness, replay tool, macro tool, data loader

- generators for data, transactions, load, system messages and events

- emulator / simulator
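A data generator need not be elaborate. The sketch below, with a hypothetical record shape (`name`, `quantity`, `note`) and hypothetical value pools, produces varied and deliberately awkward records. Seeding the random source is a design choice here: it means a surprising record can be regenerated later by reusing the same seed.

```python
import random
import string

NAME_LENGTHS = [0, 1, 8, 64]                    # include empty and long names
QUANTITIES = [-1, 0, 1, 99, 2**31 - 1]          # boundaries and extremes
NOTES = ["", "plain", "naïve café", "<b>bold</b>"]


def make_generator(seed):
    """Return a function that yields one new record each time it is called."""
    rng = random.Random(seed)  # private, seeded source: same seed, same records
    alphabet = string.ascii_letters + " '-"

    def next_record():
        length = rng.choice(NAME_LENGTHS)
        return {
            "name": "".join(rng.choice(alphabet) for _ in range(length)),
            "quantity": rng.choice(QUANTITIES),
            "note": rng.choice(NOTES),
        }

    return next_record


if __name__ == "__main__":
    generate = make_generator(seed=36)
    for _ in range(3):
        print(generate())
```

Note the seed alongside your observations so that the run can be repeated.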

Analysis tools

- code analysis and verification

- unit tests, coverage

- data analysis, patterns and clusters, graphing

Testing, Gambling and Wicked Problems

Seeking surprises is a :wicked problem. Exploratory testing needs time and money – and that spend looks less like an investment, and more like a gamble.

To handle a gamble, you might:

- Shorten the odds

- Spread your bets – increase diversity

- Limit losses

- Reduce your minimum stake – easy setup (fast in, fast out)

- Increase your return – usable information is swift, relevant, clear, reliable.

Know that Risk ≠ Uncertainty. Uncertainty makes us freeze, but some people rather like the right risk.

Disciplines for Gamblers

- Get better at triggering, better at observing to shorten the odds

- Employ widely-different approaches to catch a broader selection of easy information

- Box time and scope to limit losses

- Use tools to reduce fixed costs and increase scope.

- Build knowledge and empathy to make your information more useful

Get comfortable with being temporarily uncertain

Opportunities for Organisations

- Invest in training, expertise and diversity to improve the odds

- Identify fixed costs that can be reduced with automation – but avoid reducing perception.

- What reduces the costs? Speed and automation. These can also reduce the perception that the work is worthwhile.

- Be able to speedily recognise and reject un-profitable approaches.

Allow temporary uncertainty.

:x Wicked Problems

You may be able to write down all possible combinations of input. All sequences for those combinations. All the elements of your system under test. All the ways they interact. All possible environments. All possible states. All possible data. All the ways you might imagine triggering the system. All observations that you can make with your current tooling. All the known problems. All the known pathologies. Every bit of value that your system has to its makers, users and their customers. Every hindrance it puts in the way of those who wish to harm your organisation, the system, or those it serves. Every edge case and stressful situation it might encounter.

There’s a lot of stuff there, much of it useful. Can you find all possible behaviours? Can you find all possible bugs? “No” is not a bad answer – it’s a true answer.

A wicked problem is a problem that is impossible to solve, rather than hard.

- Wicked problems are not understood until resolved.

- Wicked problems have no stopping rule.

- Solutions to wicked problems are not right or wrong.

- Every wicked problem is essentially novel and unique.

- Every solution to a wicked problem is a 'one shot operation.'

- Wicked problems have no given alternative solutions.

(after Conklin)

You can tame parts of a wicked problem. We’re tempted to work only with the tamed parts.

Assurance

Let’s assume that you're being asked to set up some sort of assurance without following the rails of a pre-existing culture. We can all dream.

In that case, I would advise you to work in a way that expected 'assurance' to independently assess the degree to which information coming out of a process of work could be trusted, and the degree to which the organisation as a whole trusts it. I’d expect assurance to be a sampling activity, with access to anything but without expectations of touching everything.

For exploratory testing, I would hope to:

- Watch individual exploratory testing activities to judge whether execution was skilled (I’d want a wide variety of business and testing skills on display)

- Watch the team to judge whether their exploratory testing work was supported with tools and information (exploratory testing without tools is weak and slow, exploratory testing in the dark is crippled)

- Gauge whether the team had independence of thought, and to what degree that independence was enabled and encouraged by the wider organisation (bias informs me about trust)

- Read some of the output (reports, notes, bugs) and watch some debriefs (if any) to judge how well the team transmits knowledge about its testing activities.

- Follow unexpected information to see to what extent it was valued or discounted (exploratory testing finds surprises; is that information useful and treated as such?)

I’d hope to do the following less-ET-specific tasks, too.

- Dig into any points where information could be restricted or censored (i.e. inappropriate sign-off, slow processing, disbelief or denial)

- Observe the use and integration of the team’s information in the wider organisation to judge whether the work was relevant, and accurately understood

- Judge the team’s sense of direction by observing the ways that information found, lessons learned, and feedback from the organisation affect the team’s longer-term choices.

I hope that your organisation will use these insights to help jiggle whatever needs jiggling. I hope that, once jiggled, the organisation feels that it can trust the information from the team even more, and that the team would feel even more relevant and valued.

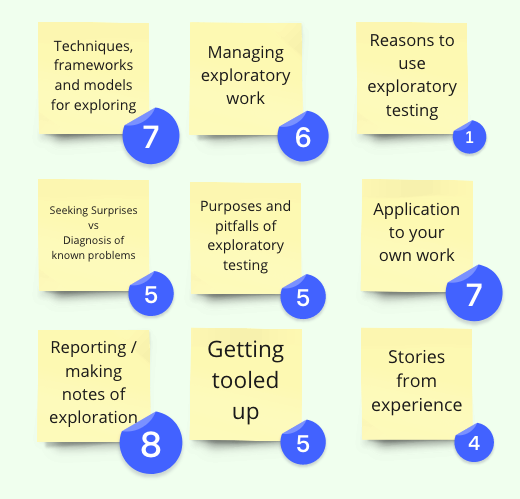

Exploratory Testing in your work at the BBC

I expect that this will take around an hour of our workshop, from 3-4pm.

This is an open-forum section of the workshop. In it, I hope that we'll talk about your opportunities to build on the things you've done here, in your own practice.

I expect we will work in groups that align with the things you are testing, or the technologies you use. You are welcome to ignore my expectation.

I want groups to look at what (at work) they want to address, and what (from here) they might apply. You'll put notes in Miro.

I hope we'll share that context – and I hope that everyone will feel welcome to exchange ideas on how these ideas might apply to your work. If an idea seems worth remembering (even if it's obvious), add it to the Miro board. If you'd like to make a commitment to do specific work by a specific date, use the board to make that information public to the workshop.

We'll close this section by looking at ways that we might expect to assess how that work has gone, and how we might support and change to find a useful path. Again, if an idea seems worth remembering (even if it's obvious), add it to the Miro board.

Closing

This section serves to consolidate ideas, and will let us extend into areas that you'd like to work with.

We may have deferred some questions, and you may have more to ask. We'll use the Questions area in Miro to keep those. If we're down on time, we may vote on where to spend time.

Finally, I want you to share two things that you'd like to remember from today. Sharing your memories will help remind and reinforce everyone's memories.

Sprue

Puzzle 15

Schedule / Workshop Description

Core workshop, skipping some techniques and processes

- Seeking risk vs verifying value

- Exploration + judgement

- Discovering models

- modelling the ways that data is transformed

- modelling the state

- mapping activity, behaviour

- Working through the UI

- Tooled-up, hands-on

- Recording and reporting

- Styles of exploratory testing

- Uses and anti-patterns

Activities

Raster Reveal

Rater – one field

Rater – full environment

Video

Words

:x From here on, it's all hidden stuff

:x How you voted

:x Show Off Expand

I'll generally use this to show more details, sometimes from other pages on this site.

To close, click on the link again, or on the x in the bottom centre of the callout box.

:x Intent in play

See the chapter “What is exploratory testing?” in Alan Richardson’s book, Dear EvilTester.

Alan calls this intent; I tend to say purpose.

:x Discipline in test design

Building experiments purposefully, and taking action whose purpose is clear.

:x Discipline in test recording

Making the intent of one's actions clear, whether that is action taken in the moment, action captured in a script or automation, or an action that can only be taken with automated assistance.

Recording observations in a way that is reliable and broad, and analysing system behaviours in the light of that evidence.

Making hypotheses and working models clear, and staying alert to the possibility of disconfirming evidence, and whether to find time to seek it.

Exploration and variety

You’re all exploring similar artefacts in the same place at the same time

There is a limited set of things to find, but an unlimited set of things which could be found. Different people, using different techniques, find different stuff.

If it’s possible to limit the discoveries to stuff that already exists, and you have enough people, time and approaches...

Many people, using varied techniques, will find more stuff

Note: Some people, some approaches, will find stuff faster or more easily, or will find more valuable stuff.

Note: We’re looking for bugs, not stuff. Information, not objects. Does the analogy still make sense? Can we count bugs? The change in perspective is fundamental, but not crucial.