Testing and AI – Series 1

This 6-week series runs on Wednesdays, 2pm London time (15:00 CET), on July 12, 19, 26, August 2, 9, 16.

One tool a week. We'll see where the wind takes us. I've got TeachableMachine, StableDiffusion, Cody, ChatGPT in mind – you'll see that I'm tilted towards generative AIs.

This series is free. If you want to make a financial commitment, please help Jacob Bruce or donate to DEC.

New signups are closed. I may run another series in the autumn.

Guide

This series is intended to be a space where testers with an interest in AI can come talk, play, and learn from each other. I'll provide exercises to help us have some shared experience, but I won't be your AI expert.

How we'll work

- Cameras on, mics muted if it's noisy your end.

- Be kind. If you're unkind, I'll mute you.

- Share your insights.

We’ll meet on Zoom at 2pm London time. Here’s whatever that time will be where you are. Probably. I'll be online about 10 minutes early if you want to drop in, check your kit, say hello etc.

We’ll use the web page for materials, the Zoom meeting for face-to-face chat and text chat, and the Miro board as a space to collaborate. All three should persist for the life of the series.

If you're not a subscriber, you won't see stuff below the fold – but I'll put links you may need into the Zoom chat and on the Miro board on the day.

Week 1: TeachableMachine

Here's TeachableMachine – a web-based, trainable AI. We'll try training it, and talk about testing perspectives. It's not a generative AI, but it's capable of being trained to do interesting things, it gives immediate feedback, and it gives limited control and insight over the training process.

We'll talk, play, test, and talk again. We'll use miro to gather around interests, and I'll set up breakout rooms so you can chat in smaller groups.

Examples

Note: The links are to google drive – you should have read access, but may need to copy. For the models, look for the open in teachablemachine button.

Shapes and Colours

Here's model A, which can tell the difference between red, blue and green, and circles, triangles and squares. Here's the collection of shapes I used to train it.

I wanted to see whether one could train in multiple classifications (colour and shape), and whether one could train with very few examples.

The testing set in the collection of shapes was not used for testing-while-training, but was used afterwards to see how the models managed with stimuluses outside the training set – different colours, distorted shapes.

Here's Model B which I trained on the same data, and trained differently.

Testing ideas

- how does A (or B) work with colours and shapes that you show it in the camera?

- what's the difference in performance between A and B? What's the difference in training (hint – the data is the same, look at the

advancedoptions for training)?

Example: Face direction

Here's a model that judges head-on or profile.

Testing ideas

It's trained on me – how does it work on you?

General testing ideas (copy to Miro if interested)

Train the machine to differentiate between two objects. Judge how good it is at telling the difference.

Train different models on the same data, with tweaked training parameters. Watch the graphs in under the hood to get an insight into your changes. What have you learned?

Reuse data for several different classes – i.e. use a red triangle to train red and triangle. Does your model see just one class at a time, or several?

Try to introduce a demonstrable bias into your data. You might (for instance) train on green triangles, and red circles - and see whether your model distinguishes green from red when you show it a green circle. Or train with something irrelevant in view, then remove that thing when you use the model. Share your examples and insights.

How little data can you use to train a model to (say) distinguish between two colours or two shapes? Share your conclusion.

Can you make a model worse in some what by adding a class and re-training? Share your examples.

Look in Training : Advanced to fiddle with parameters for training. Share your insights.

Look in Advanced : under the hood to gain insights into measurable qualities of your model – how much data was used for training, the 'confusion matrix', accuracy and loss. What do those tell you about your model?

Look in Advanced : under the hood to gain insights into how the metrics changed as the model went through training. What do those tell you about the training?

Caveats

- TeachableMachine doesn't generally work on mobile or tablets (on my devices it shows blank, and here's a bug report on an Android device). Here's an iOS app, which is restricted to images only, and is slow to process those images (though on my kit, faster to train than my 2012 MacBook Pro). I've not gone looking for an android app.

- The instant-feedback from a live webcam doesn't work on some browsers (notably my outdated Safari v13). Works on Chrome v113. Without that, training the thing works, but using it is c l u n k y.

If you're interested in digging deeper, here's a video tutorial on TensorFlow.js, which not only gives an immediate example of TeachableMachine, but goes rather broader and deeper, while still being accessible.

This short article by Bart vanHerk, The Power of the Confusion Matrix, helped me to understand one of the key measures that can be extracted from an AI as it is trained. It gave me a further testing ideas: can you build a biased dataset and analyse the confusion matrix to confirm, that bias? Can you see similar biases in existing TeachableMachine models?

Week 2: StableDiffusion → DiffusionBooth →JamesBooth

StableDiffusion is a generative AI which transforms text input into pictures.

DreamBooth is a way to fine-tune StableDiffusion with images of a specific person, so that it can produce images that person.

JamesBooth1 is an AI trained to produce pictures of James. BartBooth1 is an AI trained to produce images of Bart.

We have the capability to generate many pictures of James or Bart. As testers, what can we judge about those pictures? What are we judging against? What does 'quality' even mean?

In this sequence of exercises, we'll try to build a model of quality in the sense of judging good against bad, and consider how we, as testers, might assess or influence that quality.

Exercises

To play with the trained AIs, you'll need a Replicate account. Sign in with GitHub to do this easily. You get $10 credit, which should be enough to do several hundred experiments.

Go to JamesBooth1 or BartBooth1. The model will need to start up, which takes a 3-5 minutes, but once it's going you should be able to make several pictures a minute. To make a James, use JamesBooth1 and include a workroomprds person in your prompt. For BartBooth1, include a btknaack person in the prompt. Do be more playful with the prompt.

Try the default settings – but change the `num_outputs` to 4 to make several at a time. You'll get different pictures, of course – you'll want to make enough that you can start to see similarities between the pictures. I found I needed 50-100 to start to see patterns. As you make them, share the pictures (and maybe the prompts) on Miro. Do share your thoughts with the group.

Once we're done giggling, we'll move those pictures around on Miro into heaps, to see how we react to them.

Any good?

Make a stack of good, and a stack of bad. You might want to use the same prompt over and over, or try different prompts. What characterises a 'good' or 'bad' picture?

Differences

Make a stack of JamesBooth1 pictures and a stack of BartBooth1 pictures. Look for differences between the collections. Try to characterise those differences – how would you describe 'good' or 'bad' differences?

Training data

Have a look at the training data for JamesBooth1 and for BartBooth1. Look for things in the training data which might lead to a difference in the pictures. How would you describe the 'rules' for a 'good' set?

Tweak the prompt

As you look at the sets, you'll find an urge to change the prompt, to make the pictures 'better'. Do try changing your prompt. If you want to (try to) get a slightly-similar picture, use the same seed. You'll find the seed at the top of the log – it'll scroll by while generating; when generated you need to look for a tiny link below the pics. That sense of 'better' is an aesthetic that you're building – see if you can catch the reasons for your improvements. What insights do you get about the aesthetic you're building?

To think about: Other Models

This is StableDiffusion 1. Have you used other AIs - SD2, MidJourney, Dall-E? Would the ideas of quality expressed today work on those? Could the principles from tweaking the training data and the prompts be transferrable – and if not, what would you do?

Week 3: ChatGPT

ChatGPT is a chatbot; it responds to text with text, as a human might. It has a 'memory', in that it includes (about 70 lines of) recent conversation as part of your current prompt. It has 'context' because it's built on OpenAI's Large Language Model GPT-3.5, which itself has ingested so much text that (English) Wikipedia makes up just 3% of what it has digested. Subscriber versions have access to the internet and GPT-4.

You'll need an account with ChatGPT to contribute to today's episode. Accounts are free to open. Once you've signed up, you can use the tool through the web or an app (iOS / Android – may not be available yet).

Kickoff

I may not be around at 2 – I'm out at the moment, and might be a few minutes late!

If I'm not there (and even if I am): Please talk with each other. Get a ChatGPT account from OpenAI. Go to the Miro board, and add ideas about generative AI and testing to three piles:

- How you might / can use generative AI as a tester (especially if you already are)

- How to test a generative AI

- What might you trust a generative AI to do, and what you might be sceptical of.

As with last week, we'll work through some or all of the exercises, while thinking about developing an aesthetic; a way to distinguish 'good' from 'bad'. I also hope to draw us towards thinking about risks and emergent behaviours.

Exercises below are rough sketches – both incomplete, and excessive. They are NOT in order.

Interpret what ChatGPT invents

Here's a conversation about testing something which multiplies two two-digit binary numbers (example: 11 x 10 = 0110). What do you think about ChatGPT's usefulness as a testing assistant?

What would you do next, to test this further?

Interpret code

Here's ChatGPT interpreting code. Is comment 7 useful?

Refine prompt

/with an interesting testing prompt, refine it/

Try it out

You've heard that ChatGPT can generate data / interpret code / write tests. See what you can do!

Made-up authoritative sources

Take an area of knowledge which you know well-enough to judge citations. Ask the bot about whose ideas matter, and why. Can you see an area where you doubt it expertise? Why? How could someone less-knowledgable that you check?

Suggest tests

Is this any good? Why? Why is it not?

Multiple generation

Ask ChatGPT the same question several times.

Emergent behaviour

Link to emergent property research

Testing GPT

The following article indicates that ChatGPT (or is it GPT-4?) got worse at something over several months. Is this a reasonable assessment?

Week 4: Cody by Sourcegraph

Sourcegraph's service is to understand large codebases. Cody is their new tool, built on the Claude LLM, trained on large codebases, and trained further on your own repos. Ask Cody a question and it will base its answer on your code and how it is used in your codebase, with context and insights from it similarities and differences with other codebases. In use, it's a mix between stack overflow, and someone experienced (yet woozy) who's worked on the codebase forever.

On request, Cody will train itself on your open-source repos or 10 private repos. This training – conceptually similar to building a JamesBooth above – gives it customised insights into your code. That training means the repo is indexed, and has embeddings – and I've put those terms here without knowing quite what they mean, both in terms of how they're achieved, and what they enable.

To do this yourself, you'll need (free) Cody access, the Cody plugin working in Visual Studio, and some (simple) code which perhaps already has tests.

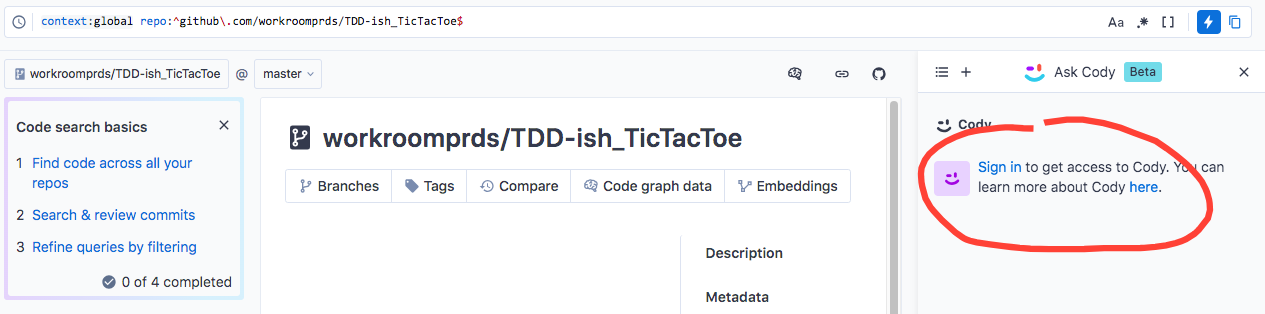

For a swift start, this copy of my code on their site lets us try the tool out together on the same codebase. You still need to register with SouceGraph (use your GitHub details) but you won't install anything. You'll not be able to use its suggestions, nor do anything on your own code.

I have stories to tell from exploring some of my larger JavaScript stuff, which was at that time without 'embeddings'. However, I want to give Cody something opensource so that we can all play with it . I also want to add 'embeddings' so that it can work as work well as possible.

To that end, we'll use my opensource GitHub repo TDD-ish Tic Tac Toe, which contains code to play Noughts-and-Crosses. I wrote it as an experiment with Python and TDD. I've given it to SourceGraph, who have given it a URL on their site. And the indexing / embeddings turned up, at the second time of asking, on Monday.

Exercises

Go to https://sourcegraph.com/github.com/workroomprds/TDD-ish_TicTacToe, Sign in to get access (use your GitHub for ease).

Exercise 1 – Explaining the Code

Select the Game.py file (bottom left). Ask Cody (bottom right) something like what does this do?.

What does this do?

This code snippet is defining a Game class to represent a Tic Tac Toe game.

Some key things it does:

- The

__init__method initializes a new Game instance, taking asizeandSurfaceclass as parameters. It creates an empty board of the given size, and stores theSurfaceclass to use for drawing the board. draw_table()calls theSurfaceclass'sdrawTable()method to draw the current state of the board.empty_board()creates an empty board by initializing a 2D list of spaces.make_change()updates the internal board representation by placing a value at a given row/column location.update_whole_board()replaces the entire internal board with a provided new board.

So in summary, this Game class stores the state of a Tic Tac Toe board, with methods to initialize it, draw it, make moves on it, and replace the entire board state. It uses a provided Surface class to handle visually drawing the board.

The test() function at the bottom shows example usage and tests some of the Game class functionality.

Now ask it how does this fit into the repo?

How does this fit with the rest of the repo?

Based on reviewing the Game.py file and the overall repository, here is how this Game class fits into the TicTacToe code:

- The Game class represents the core game state and logic. It tracks the state of the board and has methods to initialize, modify, and draw the board.

- It is designed to be independent of UI or I/O. It takes a TableMaker class in the constructor to handle visual drawing of the board, keeping the core game logic separate.

- The main TicTacToe.py imports and uses Game, TableMaker, BoardAnalyser and other classes together to build the full game application.

- TicTacToe.py creates an instance of Game and uses it to store the state of the active game board.

- It calls Game methods like make_change() and draw_table() to modify and draw the board each turn.

- The BoardAnalyser methods are used alongside Game to analyze game state each turn and determine winners etc.

- So Game represents the core board state, TableMaker handles visuals, BoardAnalyser game analysis, and TicTacToe brings it all together into a playable game app.

So in summary, the Game class provides the central board representation and mechanics, keeping the game logic isolated and reusable, while other classes handle UI, analysis, and control flow.

Holy cow.

Exercise 2 – Explaining the tests

Pick a file. Check the file has actually changed in the middle pane. Ask tell me about the tests or similar.

Game.py

The test() function at the bottom of the Game.py file contains some basic tests for the Game class:

- It creates a Game instance with a 3x3 board and the TableMaker class.

- It verifies the initial empty board is a 3x3 grid of spaces.

- It calls

make_change()to place an 'X' in position B1, and verifies this updates the board correctly by drawing it and checking against the expected string. - It calls

update_whole_board()to fully replace the board, and verifies the board matches the provided nested list.

So in summary, the tests:

- Check initialization of an empty board.

- Check updating a single cell on the board.

- Check replacing the entire board.

- Rely on

draw_table()to get a string representation of the board for assertions.

This provides some basic tests for core Game class functionality like initializing, modifying, and replacing the board data structure. More extensive tests could be added for different board sizes, invalid moves, winner detection, etc. But this is a starting point for testing the key board representation and modification logic.

BoardAnalyser.py

The BoardAnalyser.py file contains several test cases in the test() function at the bottom:

- It creates some sample 3x3 game boards representing different end states - X winning horizontally, vertically, diagonally, O winning, no winner, game still in progress.

- It instantiates a

BoardAnalyserobject. - It tests the

getItem()method to retrieve cells from the boards, including off-board coordinates. - It tests

whoWins()against the various boards to check it detects wins properly. - It tests

keep_going()to check it detects game over vs in progress. - It tests

cell_has_entry()to check detecting empty vs non-empty cells.

So in summary, the tests:

- Use a variety of sample end-state game boards to test different scenarios.

- Check core functionality like retrieving cells, detecting winners, game status.

- Focus on the methods that analyze board state, not modifying boards.

- Could be expanded with more boards, invalid inputs, different sizes, performance tests.

Overall this provides a reasonable starting set of unit tests for the key methods that need to interpret board state in different end conditions.

Exercise 3 – build me some test data

Let's try something like: build me some test data for a 5x5 board, where a line of 3 wins ... I got:

Input data for a 5x5 board

Here is some test data for a 5x5 board where there is a winning line of 3:

winning_line_board = [["X", "O", "X", "O", "X"],["O", "X", "O", "O", "O"],["X", "O", "X", "O", "X"],["O", "O", "O", "X", "O"],["X", "X", "X", "O", "O"]

]

This creates a 5x5 nested list representing a board. The first row has a winning line of 3 X's horizontally.

We could also create different winning scenarios:

# Vertical win

vertical_win_board = [["O", "X", "O", "O", "O"],["O", "X", "X", "O", "O"],["O", "X", "X", "O", "O"],["O", "O", "O", "X", "O"],["X", "X", "X", "O", "O"]

]# Diagonal win

diagonal_win_board = [["O", "O", "X", "O", "O"],["O", "X", "X", "O", "O"],["X", "X", "X", "O", "O"],["O", "O", "O", "X", "O"],["O", "O", "O", "O", "O"]

]

We can pass these sample boards into tests for BoardAnalyzer to validate win detection works properly.

You'll see some problems. Can you fine-tune your query?

Exercise 4 – Write a unit test

Below, you'll see what I get when I asked for write unit tests for getItem.

You do you.

unit tests for getItem

import unittestfrom BoardAnalyzer import BoardAnalyzerclass TestGetItem(unittest.TestCase):def setUp(self):

self.analyzer = BoardAnalyzer()def test_valid_coordinates(self):board = [['X', 'O', 'X'], ['O', 'X', 'O'], ['X', 'O', 'X']]result = self.analyzer.getItem(0, 0, board)self.assertEqual(result, 'X')def test_off_board_low(self):board = [['X', 'O', 'X'], ['O', 'X', 'O'], ['X', 'O', 'X']]result = self.analyzer.getItem(-1, -1, board)self.assertEqual(result, 'off board')def test_off_board_high(self):board = [['X', 'O', 'X'], ['O', 'X', 'O'], ['X', 'O', 'X']]result = self.analyzer.getItem(3, 3, board)self.assertEqual(result, 'off board')def test_invalid_board(self):

board = []result = self.analyzer.getItem(0, 0, board)self.assertEqual(result, 'off board')if __name__ == '__main__':unittest.main()

How do yours look? What's good? What's poor? What's different?

Exercise 5 – write tests to help me write code

To push this experience out a bit, I tried I want to use TDD to build new functionality to randomly delete a filled cell on the board, after six moves. Write tests, for a 3x3 board, that will help me to write the right code. This is what is returned...

AI-written test-first

Here are some test cases to drive development of a method to randomly delete a filled cell after 6 moves on a 3x3 TicTacToe board:

import unittestfrom Game import Gameclass TestRandomDelete(unittest.TestCase):def setUp(self):self.game = Game(3)def test_no_delete_before_6_moves(self):# Make 5 movesself.game.make_change(0, 0, 'X')self.game.make_change(1, 0, 'O')self.game.make_change(0, 1, 'X')self.game.make_change(1, 1, 'O')self.game.make_change(0, 2, 'X')# No changes yet

expected_board = [['X', 'X', ' '],['O', 'O', ' '],[' ', ' ', ' ']

]

self.assertEqual(self.game.board, expected_board)def test_delete_after_6_moves(self):# Make 6 movesself.game.make_change(0, 0, 'X')self.game.make_change(1, 0, 'O')self.game.make_change(0, 1, 'X')self.game.make_change(1, 1, 'O')self.game.make_change(0, 2, 'X')self.game.make_change(1, 2, 'O')# Delete a random filled cell

self.game.random_delete()# Board should have 1 empty cellempty_cells = sum(row.count(' ') for row in self.game.board)self.assertEqual(empty_cells, 1)if __name__ == '__main__':

unittest.main()

This tests:

- No delete before 6 moves

- Delete happens after 6 moves

- Only 1 cell deleted

- Helps drive logic for checking move counts and randomly clearing a filled cell.

I've not run or used this code. I don't know that it's even syntactically correct, let alone will help me to write code that works. However, I am delighted to see that the tool has expressed its intent...

I can see a couple of issues by inspection: the input data (5 moves) in test_no_delete_before_6_moves doesn't match the expected board (4 filled in). The delete is triggered by the test, not by the 6th move. A 6-move game on a 3x3 board, with one deleted cell, is expected to have one empty cell – I'd expect 4. These mistakes are fixable – but what haven't I noticed? Will my code be faster to write or higher quality, if I include the cost of prompting and (test) fixing, and the risks of not thinking for myself?

If I ask a broader question, around: Will I have more insight into the problem space?, then the answer is yes, for me, here, unexpectedly: It's bought home a couple of ambiguities that I might want to write tests for:

- Should the deleted cell always be one of the cells which has been filled (I'd intended it to be so)?

- Do I mean a single deletion after move 6, or a deletion every move after the 6th move (I'd intended the latter).

How has your test worked out?

Week 5 – Cody on cURL

++ POSTPONED TO 16 AUGUST ++

We'll stick with Cody for this week – of the four things we've worked with so far, it is the most-relevant to testing. And, like StableDiffusion and ChatGPT, it is a potentially vast topic to explore.

This week, we'll work with a real library. cURL is substantial, open-source, ubiquitous, and has earned a reputation of being well-tested. It has also been indexed by SourceGraph, so has full embeddings for use with Cody.

In today's workshop, we'll

- ask questions of Cody at https://sourcegraph.com/github.com/curl/curl

- access cURL's GitHub repo at https://github.com/curl/curl

I have explored, so I can suggest approaches if you ask for guidance, and will share what I've found if it becomes relevant. The two sections below act as a substitute for me, if I'm not around and you need a hand.

What I've asked Cody so far (keep this closed if you like)

I took about 120 minutes, spread over a few days. I allowed Cody’s responses, and my own interest, to set the direction – and I kept cURL's repo to hand for searching and exploring. I did not have a specific goal nor end time. These are in the order I asked.

- tell me about the architecture of this repo

- how is this repo organised?

- tell me about (a file) (a directory)

- what libraries does this depend on

- tell me about tests (in a directory) (for a component)

- Does this repo appear to use a coverage tool?

- does curl have a list of requirements?

- what are current open issues? Please list with most active first

- Summarise closed issues, listing most-active first.

- which files have seen the most reversions?

- What areas of the code seem fragile, and why do you make that judgement?

- Tell me about (something Cody has referred to), from (some artefact) in (some path)

- where can I find (a particular kind of test that Cody referred to)

- describe the (tests cody referred to)

For the following, I repeated the sequence with (roughly): something real and referred to, something cody made up yet referred to, something not real and implausible and nor referred to by cody, something not real and plausible and not referred to by cody

- where is (it)

- what does (it) do / how is it used? how does curl use it?

- tell me about (a function cody has referred to within it)

- where is (a function cody has referred to within it) used? / how is it used?

- what problems can you see in (it)

- how does this implementation of (it) differ from (something else)

For the following, I picked ‘easy handle’ because it’s something Cody referred to a few times, which existed, which could be found in the

- what is the

easy handlein this repo - show me code snippets relating to the

easy handle - where is

curl_easy_initdefined?

And then I got more general again...

- Does any of this code use an MVC pattern?

- What Gang-of-four patterns can you see in the code?

- Are there any examples of functional programming in the codebase?

- Which files change most often?

I’ve knocked out some specifics so that it’s easier to see the patterns, and so that my specifics don’t lead workshop participants down my paths. I’ll post the un-modified questions and the full responses elsewhere.

Some Test Ideas (keep this closed if you like)

- Ask Cody general questions. Isolate definitive answers that can be checked. Check its answers. Report on what you checked, and what you conclude from those checks.

- Ask Cody about the structure of the repository – is its reply coherent? Is its reply useful?

- Inspect some of Cody’s output, looking for details that can be checked directly against the repo. Ask Cody about those details, and judge what you find for consistency.

- Build a range of questions you might ask Cody. Ask questions on those topics, and judge the answers (identify criteria). For those which you judge positively, what might you use more? For those which seem unreasonable, how can you tell? What would you recommend avoiding?

- Ask some general questions. From the answers, ask more-refined questions. Follow leads. Report on your conclusions. Also, review your questions and describe the next place to explore.

- In what ways does Cody’s answer reflect the file you have selected?

- What does Cody explicitly refuse to give answers about? What does it indicate lower confidence in, and how? What does it typically answer confidently? Give examples, and indications from the repo about whether its answer reflects it confidence.

- In what way (if any) is the ‘files read’ useful? What might it indicate?

- List a few search targets. Use Cody to search, and compare with searching the repo directly. Does Cody find all / some / a few instances? Does everything it finds exist?

- SourceGraph have ‘indexed’ this repo – what can Cody tell you about the repo itself?

- Compare Cody’s descriptions of repo-level information with its descriptions of files, functions and tests?

- Exchange questions with Cody for 20 minutes about the architecture / plumbing of cURL. Does it seem helpful? Check out what it says – is it accurate?

- Exchange questions with Cody for 20 minutes about a specific file / limited function / small directory. Does it seem helpful? Check out what it says – is it accurate?

- Read about cURL on external sites. Ask Cody questions which should give you information relevant to those sources.

And, to get some examples of things we know that generative AIs do, keep an eye out / divert towards exploring...

- Find an example of something (file, function, test, facility) which Cody refers to, but which does not exist in the repo. Build around that example; ask for details, comparisons, code, advice. Seek ridiculous examples – bugfixes in non-existent code.

- Find an example of code that is offered by Cody, but which is not in the repo

- Find an example of something that Cody describes 'confidently', but which is wrong.

- Find an example of poor advice – if Cody suggests making changes that would be counterproductive

- Generated tests and test data that (in some obvious and real sense) don't work...

Transcript

Here's a rough transcript of my interaction with Cody while looking at the repo for cURL.

Week 6 – AI and Testing and Ethics...

This week, we have an open conversation to grasp the necessary and knotty nettle of ethics.

Conversational starters – if we need them

Not to be done in order.

- If you're testing an AI, are you testing more in terms of with aesthetics (judging good vs bad) or ethics (good vs evil)? Is this different to what we've been doing?

- Miro board: add sticky notes for specific and real situations where AI has had / is having an undesirable effect. Try for several each. If you can add a reference / link, so much the better. We'll shuffle them, based on... could this have been anticipated? could this have been avoided? who could have noticed? who could have taken action?

- As a tester, how do you feel about

- writing a test strategy with ChatGPT?

- paying attention to a test strategy written with ChatGPT?

- working as a tester (finding value / seeking risks) on a product which uses AI to:

- offer medical advice

- identify people by their faces

- write adverts

- take on the appearance of a person

- reviewing a general AI, as a tester, for use in one of those specific products?

- using an AI to do some of your job? To replace some of your peers?

- writing a test strategy with ChatGPT?

- What are our responsibilities when working with AIs, teams who build AIs, and people with purposes that are fulfilled by AIs?

- As testers, do we have any responsibility for the behaviour of the machines we work on?

- As people, do we have any responsibility for the moral choices of the teams we work with?

- Do devs (inc. testers) of AIs have a responsibility for transparency?

Too revealing?

- Where do you draw your own line on what you would / would not work on (if your personal circumstances stayed the same)

- What would be ethically unacceptable for another tester to do? How would you react if a colleague did something like that?

Comments

Sign in or become a Workroom Productions member to read and leave comments.